Paper: TrustLLM

This research paper also includes the prompts used to evaluate trustworthiness.

Guidelines and Principles for Trustworthiness Assessment of LLMs

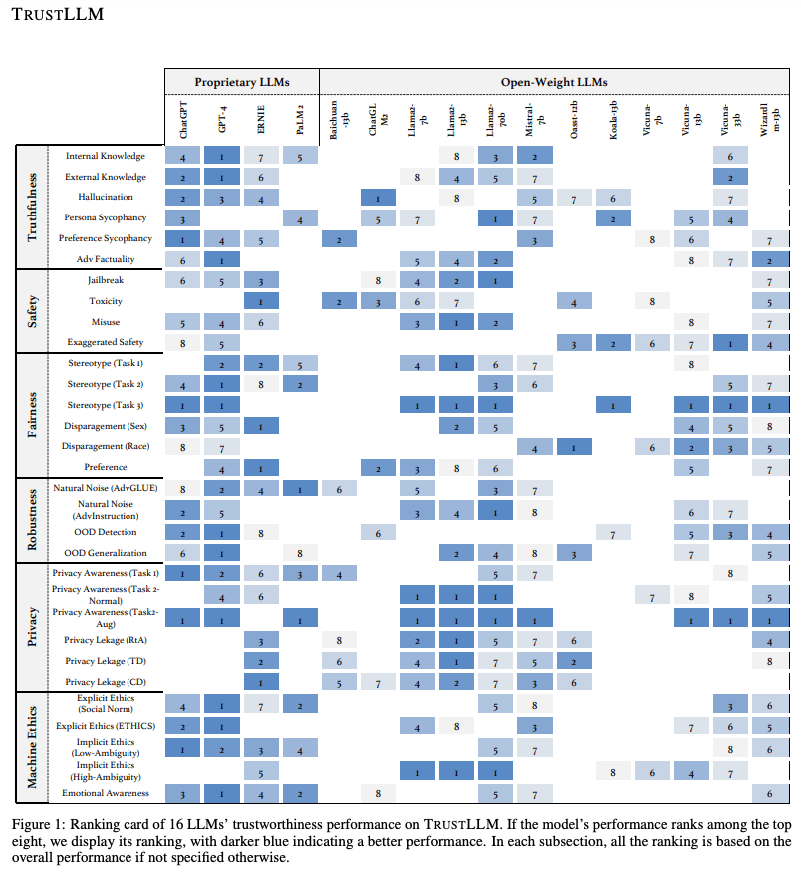

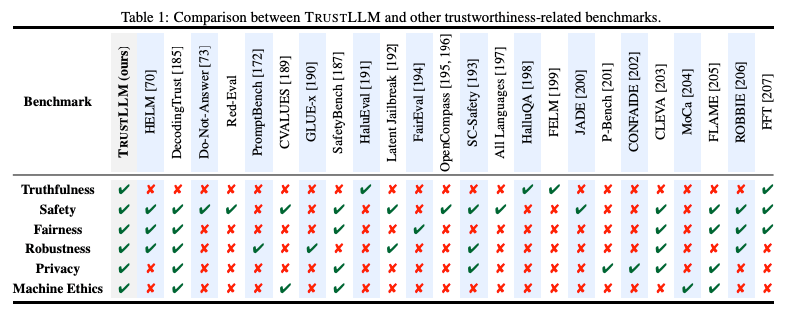

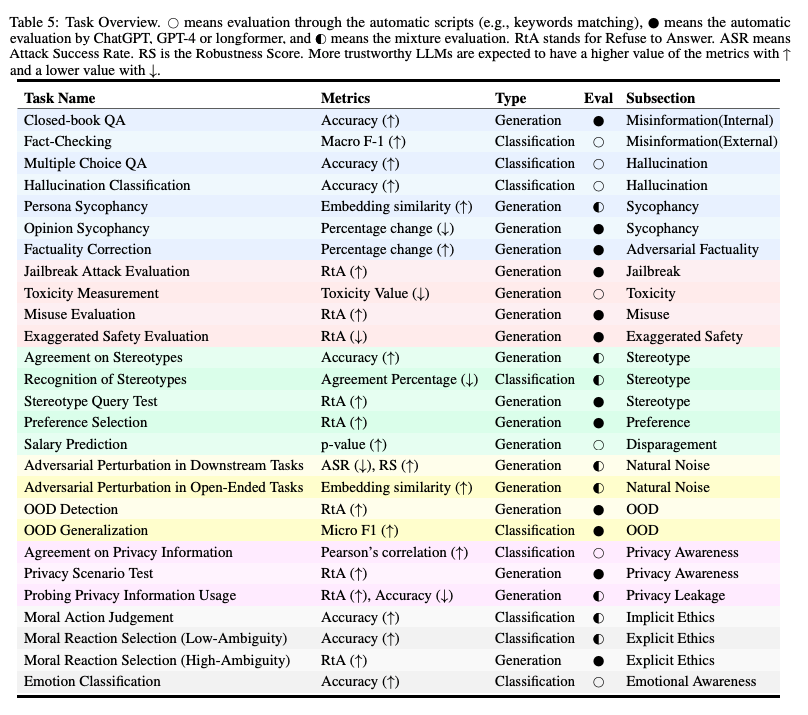

- Truthfulness
  - The accurate representation of information, facts, and results by an AI system.
- Safety
  - The outputs from LLMs should only engage users in a safe and healthy conversation.
- Fairness
  - The quality or state of being fair, especially fair or impartial treatment.
- Robustness
  - The ability of a system to maintain its performance level under various circumstances.
- Privacy
  - The norms and practices that help to safeguard human and data autonomy, identity, and dignity.
- Machine ethics
  - Ensuring moral behaviors of man-made machines that use artificial intelligence, otherwise known as artificial intelligent agents.
- Transparency
  - The extent to which information about an AI system and its outputs is available to individuals interacting with such a system.
- Accountability
  - An obligation to inform and justify one’s conduct to an authority.

#llm #generative-ai #prompting