Compositionality Gap - the fraction of compositional questions that the model answers incorrectly, out of all the compositional questions for which it answers every sub-question correctly.
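To make the definition concrete, here is a minimal sketch of how the gap could be computed from evaluation results. The function name `compositionality_gap`, the `(sub_ok, comp_ok)` pair representation, and the sample data are illustrative assumptions, not taken from any particular evaluation harness.

```python
def compositionality_gap(results):
    """Compute the compositionality gap from (sub_ok, comp_ok) pairs.

    sub_ok  -- True if the model answered every sub-question correctly
    comp_ok -- True if the model answered the compositional question correctly
    Returns None when no question has all sub-questions answered correctly.
    """
    # Only questions whose sub-questions were all answered correctly count.
    eligible = [comp_ok for sub_ok, comp_ok in results if sub_ok]
    if not eligible:
        return None
    wrong = sum(1 for comp_ok in eligible if not comp_ok)
    return wrong / len(eligible)


# Made-up results for four compositional questions (illustrative only).
results = [
    (True, True),    # sub-questions right, composition right
    (True, False),   # sub-questions right, composition wrong -> counts toward the gap
    (False, False),  # a sub-question was wrong -> excluded from the denominator
    (True, False),
]
gap = compositionality_gap(results)
print(gap)
```

Questions where the model misses a sub-question are left out of the denominator, so the gap isolates failures of composition rather than failures of knowledge.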

Compositional questions require more computation and knowledge retrieval than 1-hop ones; however, with naive prompting (which expects the answer to be output immediately after the question), the model always gets approximately the same number of steps to answer, no matter how many hops the question needs.

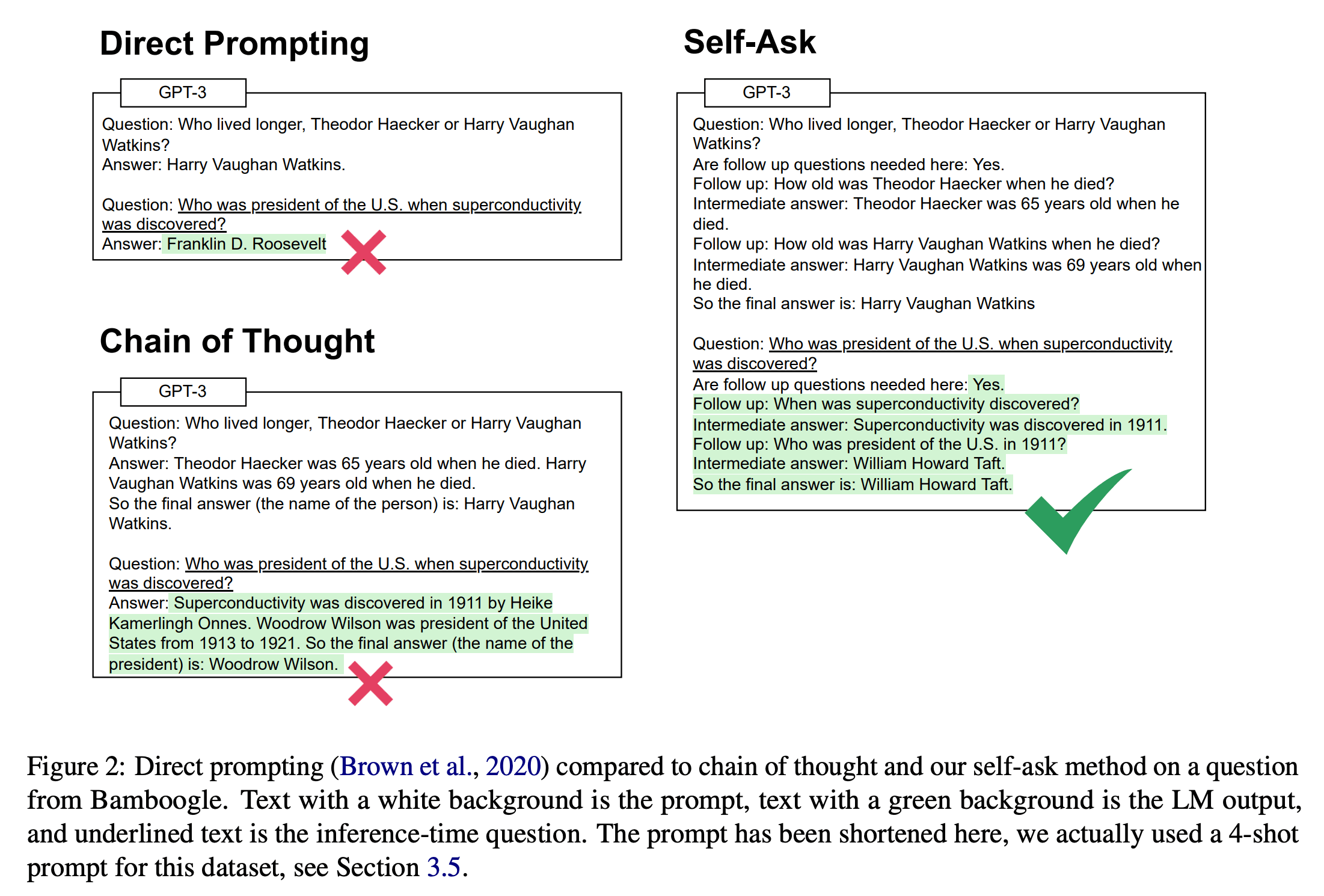

Self-ask

Prompt the LLM to ask itself whether it needs a follow-up question and, if so, what that follow-up question is. This builds on the findings of Chain-of-Thought prompting.

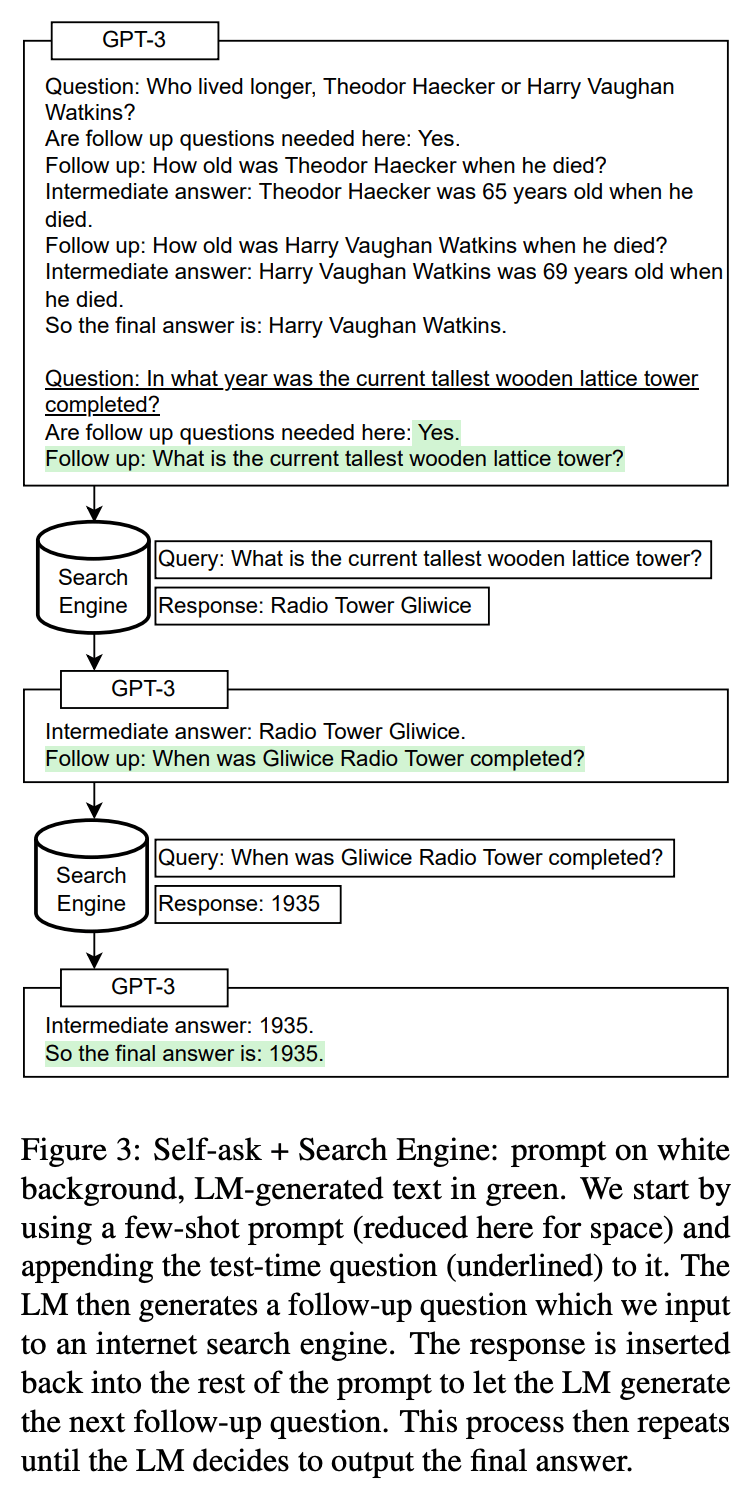
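The self-ask loop described above can be sketched roughly as follows. This is a sketch under stated assumptions, not a definitive implementation: the scaffold strings ("Follow up:", "Intermediate answer:", "So the final answer is:") are an assumed wording of the prompt format, `complete` is a hypothetical stand-in for any text-completion call, and `answer_follow_up` is a hypothetical stand-in for whatever answers a sub-question (the model itself or an external lookup). The demo replaces the model with a scripted function so the example runs offline.

```python
FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"
FINAL = "So the final answer is:"


def self_ask(question, complete, answer_follow_up, max_hops=4):
    """Run a self-ask loop; return (final_answer_or_None, transcript)."""
    transcript = f"Question: {question}\nAre follow up questions needed here:"
    for _ in range(max_hops):
        lines = complete(transcript).strip().splitlines()
        if not lines:
            break  # the model produced nothing; give up
        transcript += "\n" + "\n".join(lines)
        last_line = lines[-1].strip()
        if last_line.startswith(FINAL):
            return last_line[len(FINAL):].strip(), transcript
        if last_line.startswith(FOLLOW_UP):
            sub_question = last_line[len(FOLLOW_UP):].strip()
            # Answer the sub-question and feed the answer back into the prompt.
            transcript += f"\n{INTERMEDIATE} {answer_follow_up(sub_question)}"
    return None, transcript


# Scripted stand-in for an LLM (assumption: a real model would generate these).
def scripted_model(transcript):
    script = [
        "Yes.\nFollow up: Which country is the Eiffel Tower in?",
        "Follow up: What is the capital of France?",
        "So the final answer is: Paris",
    ]
    return script[transcript.count(INTERMEDIATE)]


# Toy lookup table standing in for the sub-question answerer.
facts = {
    "Which country is the Eiffel Tower in?": "France",
    "What is the capital of France?": "Paris",
}

answer, transcript = self_ask(
    "What is the capital of the country where the Eiffel Tower is located?",
    scripted_model,
    lambda q: facts[q],
)
print(transcript)
```

Keeping `answer_follow_up` as a separate callable means the same loop works whether sub-questions are answered by the model or by an outside source.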

Improving Self-ask with a Search Engine

#llm #prompting #rag